Artificial intelligence (AI) currently seems to be making one giant leap after another, with Deep Learning being the latest buzzword. Remember Google’s DeepDream, which supposedly showed what androids dream of by running algorithmic pareidolia (seeing faces in patterns) through a convolutional neural network. Or Google’s AlphaGo, which beat Korean grandmaster Lee Sedol in a historic Go match. These artificial intelligences are powerful because they are so deeply layered that it becomes harder and harder to read the faint signals echoing back to us surface dwellers from the bottom of their oceanic minds. All the major corporations – Google, Facebook, Microsoft, Baidu, Amazon, Apple, IBM, etc. – are currently remodeling the foundations of their entire infrastructure to run and train Deep Learning systems – Google’s is aptly called “DistBelief.”

Every search request, every uploaded and tagged image, literally all input into the internet is currently training – or, one could say, educating – layer upon layer of algorithms. The minuscule surfaces – a trippy-looking DeepDream image or a televised game of Go – again cover a massive structural shift in which the better part of reading, writing, and processing is done machine to machine, while humans play merely a secondary role as feeders, content providers, and caretakers. Google has released TensorFlow, its underlying software library for machine learning in various kinds of perceptual and language-understanding tasks, as open source – which turned the very idea of open source upside down. With this move, Google has simply expanded its user base and its database significantly, and gets free feedback and debugging in return. This is not yet an artificial intelligence on the loose on the net, but it is still a significant step, one that has to be taken with care.

Following Google, the Chinese internet giant Baidu released its machine learning toolkit Paddle (Parallel Distributed Deep Learning) as open source, marking a global infrastructural shift in how seriously deep learning is being taken and how widely it is employed to solve, among other things, the problem of evaluating the vast amounts of complex data collected by companies and intelligence services alike. Facebook is also increasingly investing in special-purpose hardware (not to speak of their Aquila drones and Terragraph project for global internet access … read: more data to work with) under the name FAIR (Facebook Artificial Intelligence Research).

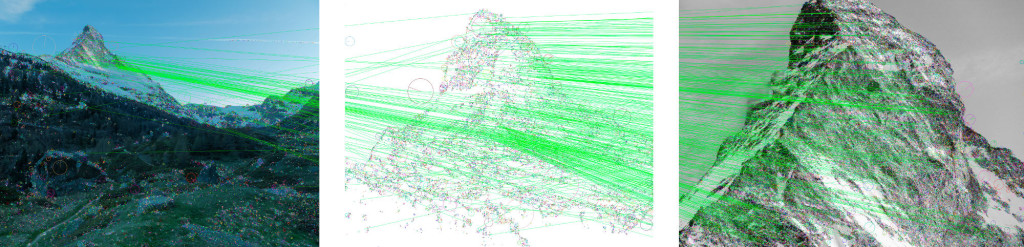

Trevor pulls up a set of blurry images, which, to the human eye, are hard to interpret: blobs of color, fuzzy borders, not much contrast and shape. “This is going to be the artificial intelligence world. It’s object recognition and this is a training set.” They have been using ImageNet, a gigantic database of images for research purposes, currently holding over 14 million images. “I don’t know how many classifiers within categories of things are in it. This is where it’s trying to interpret what’s in an image, and this would be really what’s going on in the background of Facebook and Google.

“When you are training an artificial intelligence you’d say that here’s 10,000 pictures of a goldfish, here’s 10,000 pictures of the sun, here’s 10,000 pictures of an orange. Figure out what the difference is and what it will do internally is build up almost an archetype of what it thinks this object should look like probabilistically and this is the archetype, for example, of a goldfish.” What Trevor calls the “archetype” has come a long way from Jungian Gestalt concepts. These are computational archetypes, which, as Trevor explains, are calculated into being because “for every pixel in the picture of a goldfish, they determine that this pixel has a ninety percent probability of being this luminosity with this color. This is an incredibly precise image. Facebook algorithms have DeepFace where they do facial recognition and at this point has much better accuracy at identifying faces or human figures than an actual human does, and the reason why their algorithms are so good is because they have all the data. And billions of people train in that dataset – every time you tag a picture.”
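Trevor’s description can be made concrete with a toy sketch. It rests on loud assumptions: each “image” is only four luminosity values between 0 and 1, the training sets are jittered copies of invented templates rather than real photographs, and the names `build_archetype`, `classify`, and `noisy_copies` are hypothetical. A deep network trained on ImageNet does not store a per-pixel average like this; it learns layered features. The sketch only illustrates the idea of averaging many examples into a probabilistic “archetype” and matching a new image against it.

```python
import random


def build_archetype(images):
    """Average equally sized images (flat lists of luminosities) pixel by pixel."""
    count = len(images)
    return [sum(pixel_values) / count for pixel_values in zip(*images)]


def distance(image, archetype):
    """Squared distance between an image and an archetype."""
    return sum((p - a) ** 2 for p, a in zip(image, archetype))


def classify(image, archetypes):
    """Return the label whose archetype is closest to the image."""
    return min(archetypes, key=lambda label: distance(image, archetypes[label]))


def noisy_copies(template, count, rng, noise=0.1):
    """Hypothetical stand-in for a training set: jittered copies of a template."""
    return [
        [min(1.0, max(0.0, p + rng.uniform(-noise, noise))) for p in template]
        for _ in range(count)
    ]


rng = random.Random(0)  # fixed seed so the run is repeatable

# Invented four-pixel "pictures"; real training images have millions of values.
templates = {
    "goldfish": [0.9, 0.5, 0.1, 0.9],
    "sun": [1.0, 1.0, 0.9, 1.0],
    "orange": [0.8, 0.4, 0.0, 0.3],
}

# One archetype per category, built from 1,000 noisy examples each.
archetypes = {
    label: build_archetype(noisy_copies(template, 1000, rng))
    for label, template in templates.items()
}

# A new, unseen noisy goldfish is matched against all archetypes.
new_image = noisy_copies(templates["goldfish"], 1, rng)[0]
guess = classify(new_image, archetypes)
print(guess)
```

The more examples go into each average, the more stable the archetype becomes, which is one way to read the remark that the algorithms are so good because they have all the data.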

We move to another workstation and run the software; it watches us through the webcam and, in a matrix of frames of neurons, shows all the steps and layers of cognition and processing in the neural net. We’re both wearing black T-shirts, which the software interprets as bulletproof vests. We spend quite some time moving in front of the camera and exchanging gazes with this new kind of being, with its blinking neurons and the way it looks at us.

In 1936, the year mathematician and marathon runner Alan M. Turing described the abstract machine whose descendant is now looking at Trevor and me (the question of whether machines could think he would pose explicitly in 1950), psychoanalyst and/or dandy madman Jacques Lacan presented his concept of the “mirror stage.” Between the ages of six and eighteen months, an infant begins to grasp its own constitution as a subject by recognizing itself as a whole in the mirror.

Before that, it is disject, fragmented, dismembered – it exists, but only as a loose assembly of its parts. In its mirror image, it becomes an individual, its parts join together as a functional, centrally controlled unit, yet only in what we can call “real virtuality”: It forms an ego, only to be shattered into fragments again immediately. It oscillates between being and non-being – which, according to Lacan, is the very condition of the subject, the flickering scansion of Being in the imaginary order: present, absent. Mirrored, again, in the digital on and off.

Some decades later, in 1990, a man whose name sounds like a clumsy voice recognition version of Jacques Lacan – Yann LeCun (with co-authors John S. Denker and Sara A. Solla) – wrote a scientific paper at AT&T Bell Laboratories on a specific optimization idea for neural nets. It was called “Optimal Brain Damage,” and its lead author now leads Facebook’s Artificial Intelligence Research. This optimally damaged brain – a brain with all redundant neural connections chopped off, a highly specialized brain – is trying to perceive us, now, in 2016. Specific neurons are looking for specific things they have been trained on – goldfish, sun, shower cap, bulletproof vest. It clearly is constituting itself as something – perhaps not an ego, but who ever said that was necessary?

“Google DeepDream is a kind of mystification precisely because it’s turning these processes back into an image while not actually talking about what’s going on behind DeepDream. It is one part of a much bigger infrastructure that’s designed to extract as much profit out of the micro-interactions of everyday life as possible. It doesn’t need to compute, it needs to make profit. We have to think about the kind of micro-interactions that capitalism has not been able to invade yet. Capitalism has not totally been able to invade your family sitting around, sharing family photographs with each other, right?

“But now it has completely and it’s able to extract value from even smaller slices of human life on one hand, and from even more intimate social relations. And it’s able to quantify them, financialize them. What’s particularly insidious about it is that big data really capitalizes on deeply human relations. For example, everybody wants to share pictures of their children with their friends. I can’t imagine a more human thing to want to do, so Facebook capitalizes on that but that becomes totally weaponized and the last thing you should be doing is putting images of children on Facebook.”